Learning to Detect Human-Object Interactions

Supervisor: Jia Deng | Co-author: Yu-Wei Chao, Xieyang Liu

Goal

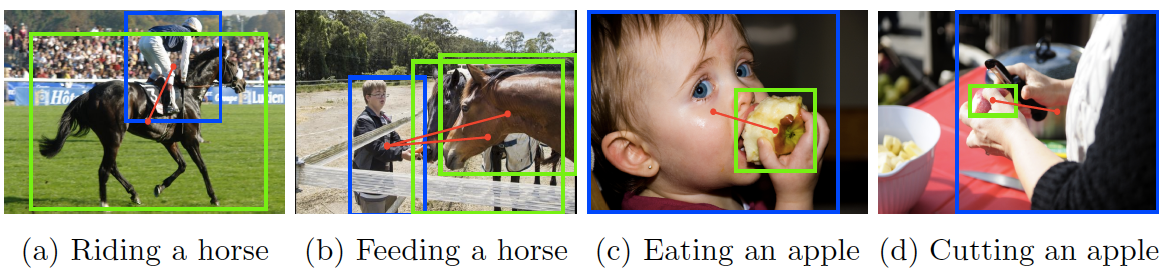

The goal of the project was to detect and label human-object interactions (HOI) in static images, rather than focusing only on single-object detection or action recognition. As shown in the figure above, people are enclosed in blue boxes and objects in green boxes. Each red line links a person to an object and indicates that an interaction of the target category takes place between them. For more details, please see our paper on arXiv and the HICO and HICO-DET datasets, which are available for research.
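To make the detection target concrete, here is a minimal sketch of what a single HOI detection might look like as a data structure: a human box, an object box, an interaction label, and a score. The names (`HOIDetection`, `iou`, `is_true_positive`) and the label format are hypothetical. The matching rule shown (same HOI label, and both the human box and the object box at IoU of at least 0.5 with a ground-truth pair) is an assumption for illustration, not a quote of any official evaluation code.

```python
from dataclasses import dataclass
from typing import Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2) in pixels


@dataclass
class HOIDetection:
    """One detected interaction: a human box, an object box, and a label."""
    human_box: Box
    object_box: Box
    hoi_class: str  # hypothetical label format, e.g. "ride bicycle"
    score: float = 1.0


def iou(a: Box, b: Box) -> float:
    """Intersection-over-union of two axis-aligned boxes."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def is_true_positive(det: HOIDetection, gt: HOIDetection,
                     thresh: float = 0.5) -> bool:
    """Assumed rule: the label must agree and BOTH boxes must overlap
    their ground-truth counterparts by at least `thresh` IoU."""
    return (
        det.hoi_class == gt.hoi_class
        and iou(det.human_box, gt.human_box) >= thresh
        and iou(det.object_box, gt.object_box) >= thresh
    )


gt = HOIDetection((10, 10, 50, 100), (40, 60, 120, 110), "ride bicycle")
det = HOIDetection((12, 12, 50, 98), (45, 60, 118, 112), "ride bicycle", 0.9)
print(is_true_positive(det, gt))  # True: both boxes overlap well, label matches
```

Requiring both boxes to match is what separates HOI detection from plain object detection: localizing the object correctly but attaching it to the wrong person still counts as a miss under this rule.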

Method

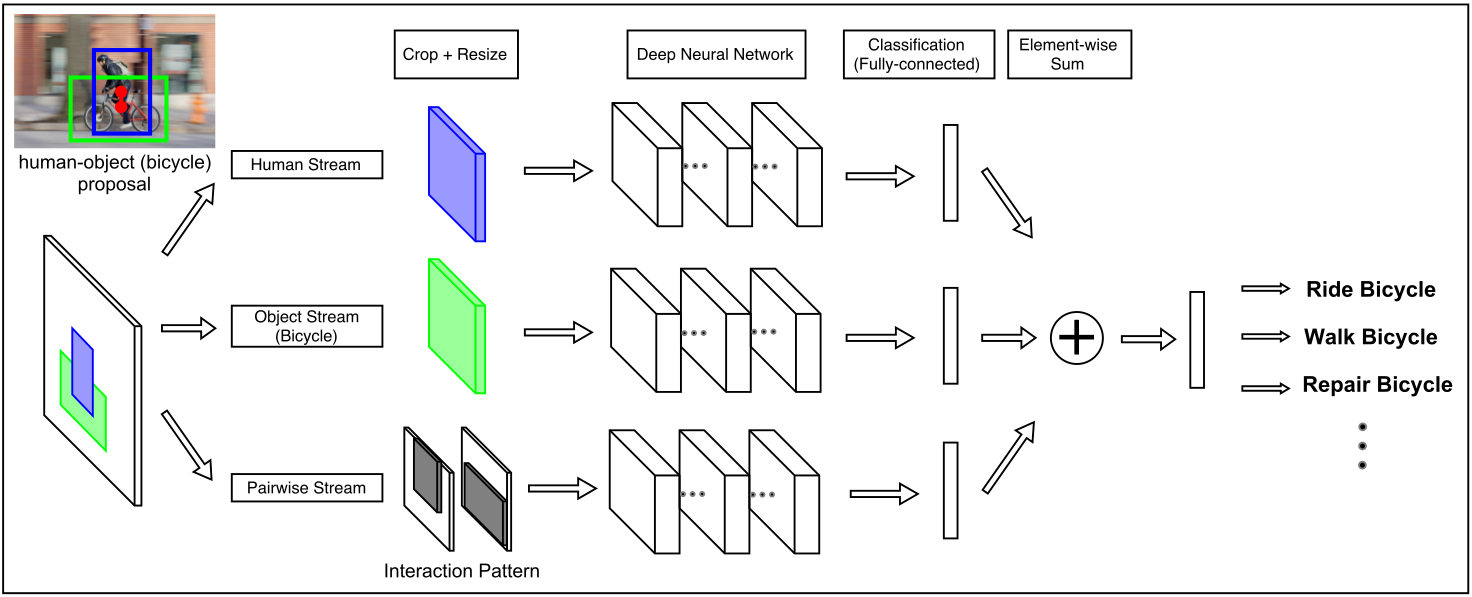

The goal is approached in two phases: human/object bounding-box detection and HOI classification. In the first phase, we trained Fast R-CNN detectors on images covering 80 object categories (including human) to localize humans and objects with bounding boxes. In the second phase, we proposed a multi-stream deep neural network that extracts features from human pose as well as local object texture, and learns spatial relations from the interaction pattern.
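The hand-off between the two phases can be sketched as follows: every human detection from phase 1 is paired with every object detection, and each pair is scored by the streams of the phase-2 network. How the streams are combined is an assumption here: this sketch sums per-class scores from each stream (late fusion), applies a sigmoid so each HOI class is scored independently, and weights the result by both detector confidences. The stream functions are hypothetical placeholders for the human, object, and pairwise networks, not the actual models.

```python
import itertools
import math
from typing import Callable, List, Sequence, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2)
Detection = Tuple[Box, float]             # (box, detector confidence)
# Hypothetical stream signature: (human_box, object_box) -> per-class scores
Stream = Callable[[Box, Box], Sequence[float]]


def sigmoid(x: float) -> float:
    return 1.0 / (1.0 + math.exp(-x))


def score_pairs(humans: List[Detection], objects: List[Detection],
                streams: List[Stream]) -> List[Tuple[Box, Box, List[float]]]:
    """Score every human-object pair produced by the detection phase.

    Per-class scores from all streams are summed (assumed late fusion),
    passed through a sigmoid, and multiplied by both detector confidences.
    """
    results = []
    for (h_box, h_conf), (o_box, o_conf) in itertools.product(humans, objects):
        per_stream = [stream(h_box, o_box) for stream in streams]
        fused = [sum(vals) for vals in zip(*per_stream)]
        probs = [h_conf * o_conf * sigmoid(v) for v in fused]
        results.append((h_box, o_box, probs))
    return results


# Toy usage with two dummy streams over two HOI classes.
humans = [((0, 0, 10, 20), 0.9)]
objects = [((5, 5, 15, 15), 0.8), ((20, 0, 30, 10), 0.5)]
streams = [lambda h, o: [0.0, 1.0], lambda h, o: [0.0, -1.0]]
out = score_pairs(humans, objects, streams)
```

Multiplying by the detector confidences keeps a confidently classified interaction from ranking highly when one of its boxes is itself a weak detection; other combination rules are equally plausible.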

The most difficult challenge of the project lies in the wide variation in how humans and objects relate to each other in natural scenes. To tackle this variation, we investigated the distribution of ground-truth bounding boxes of humans and objects and concluded that their spatial relations could be clustered into a few main patterns. We then developed an input channel that describes the interaction pattern at the coarsest level of detail, keeping only the layout of the boxes and ignoring pixel intensities. Adding this interaction-pattern channel to the human and object streams improved HOI detection mAP on HICO-DET by more than 26.50%.
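One way to build such an intensity-free input is sketched below, under stated assumptions: the pattern is a two-channel binary map cropped to the tightest window enclosing both boxes, with channel 0 set to 1 inside the human box and channel 1 set to 1 inside the object box. The function name `interaction_pattern` and the default `size` are hypothetical, and this sketch stretches the window to a square grid rather than preserving its aspect ratio.

```python
import numpy as np


def interaction_pattern(human_box, object_box, size=64):
    """Two-channel binary map of a human-object pair (pixel values ignored).

    Channel 0 marks the human box and channel 1 marks the object box, both
    expressed relative to the tightest window enclosing the two boxes and
    resampled onto a size x size grid.
    """
    hb = np.asarray(human_box, dtype=float)
    ob = np.asarray(object_box, dtype=float)
    # Attention window: union of the two boxes.
    x1, y1 = min(hb[0], ob[0]), min(hb[1], ob[1])
    x2, y2 = max(hb[2], ob[2]), max(hb[3], ob[3])
    # Guard against degenerate (zero-width or zero-height) windows.
    scale_x = size / max(x2 - x1, 1e-6)
    scale_y = size / max(y2 - y1, 1e-6)
    pattern = np.zeros((2, size, size), dtype=np.float32)
    for ch, box in enumerate((hb, ob)):
        # Map box corners into grid coordinates; rows are y, columns are x.
        c1 = int(np.floor((box[0] - x1) * scale_x))
        r1 = int(np.floor((box[1] - y1) * scale_y))
        c2 = int(np.ceil((box[2] - x1) * scale_x))
        r2 = int(np.ceil((box[3] - y1) * scale_y))
        pattern[ch, r1:r2, c1:c2] = 1.0
    return pattern


pattern = interaction_pattern((0, 0, 10, 20), (10, 10, 30, 20), size=6)
print(pattern.shape)  # (2, 6, 6)
```

Because only relative box positions survive, two images showing the same kind of interaction at different scales or locations map to similar patterns, which is what makes a small set of recurring spatial configurations learnable. Stretching versus padding the window is a design choice; padding to preserve the aspect ratio retains more shape information.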

Results

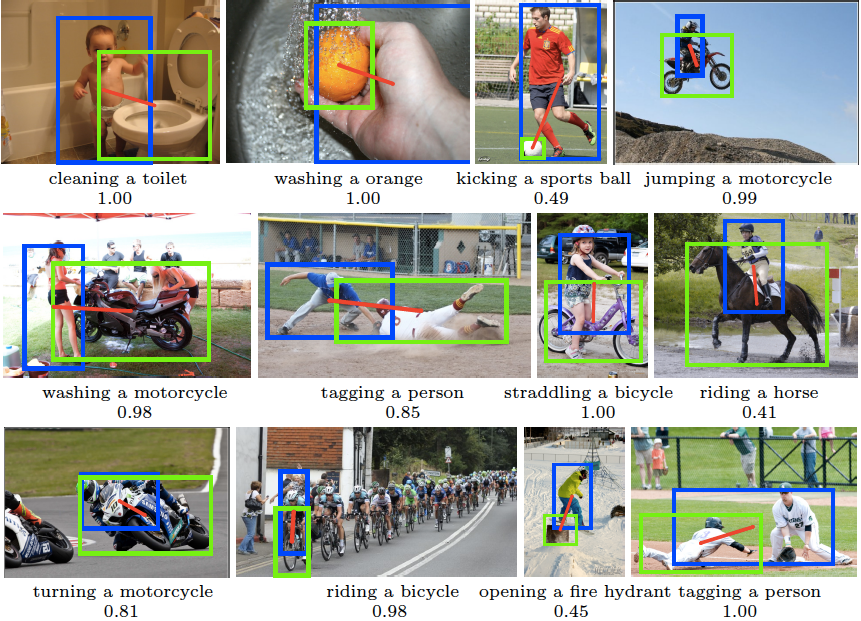

Sample results are shown above (the numbers are the confidence scores generated by the network). This work has been submitted to CVPR 2017, and the source code and more detailed experimental results will be released upon acceptance. Stay tuned!

Last updated on 2016/12/25